Pattern 29 (Data Transfer - Copy In/Copy Out)

Description

The ability of a process component to copy the values of a set of data elements from an external source (either within or outside the process environment) into its address space at the commencement of execution and to copy their final values back at completion.

Example

When the Review Witness Statements task commences, copy in the witness statement records and copy back any changes to this data at task completion.

Motivation

This facility provides components with the ability to make a local copy of data elements that can be referenced elsewhere in the process instance. This copy can then be utilised during execution and any changes that are made can be copied back at completion. It enables components to function independently of data changes and concurrency constraints that may occur in the broader process environment.

Overview

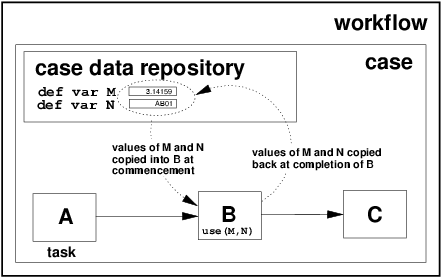

The manner in which this style of data passing operates is shown in Figure 18.

Figure 18: Data transfer - copy in/copy out
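The behaviour described above can be illustrated with a minimal Python sketch. It is not taken from any of the offerings discussed here; the function `run_with_copy_in_copy_out`, the task body and the data layout are all hypothetical names chosen to mirror the Review Witness Statements example.

```python
import copy

def run_with_copy_in_copy_out(source, names, task):
    """Run `task` on a private copy of the named data elements (hypothetical helper)."""
    # Copy in: deep-copy the elements so the task works in its own address space.
    local = {name: copy.deepcopy(source[name]) for name in names}
    task(local)
    # Copy out: write the final local values back to the external source.
    for name in names:
        source[name] = copy.deepcopy(local[name])

def review_witness_statements(data):
    # Hypothetical task body: mark every statement as reviewed.
    for statement in data["witness_statements"]:
        statement["reviewed"] = True

case_data = {
    "witness_statements": [
        {"id": 1, "reviewed": False},
        {"id": 2, "reviewed": False},
    ],
    "case_owner": "unassigned",
}

run_with_copy_in_copy_out(case_data, ["witness_statements"], review_witness_statements)
```

Only the elements named in the call are copied in and copied back; other data in the source (here `case_owner`) is untouched, and changes made by the task become visible only at completion.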

Context

There are no specific conditions associated with this pattern.

Implementation

Whilst this is not a widely supported data passing strategy, some offerings do provide limited support for it. In some cases, its use necessitates the adoption of the same naming strategy and structure for data elements within the component as used in the environment from which their values are copied. FLOWer utilises this strategy for accessing data from external databases via the InOut construct. XPDL adopts a similar strategy for data passing to and from subflows. BPMN supports this approach to data passing using Input- and OutputPropertyMaps; however, this can only be utilized for data transfer to and from an Independent Sub-Process. iPlanet also supports this style of data passing using parameters, but it too is restricted to data transferred to and from subprocesses.

Issues

Difficulties can arise with this data transfer strategy where data elements are passed to a subprocess that executes independently (asynchronously) of the calling process. In this situation, the calling process continues to execute once the subprocess call has been made. This can lead to problems when the subprocess completes and the data elements are copied back to the calling process, as the point at which this copy back will occur is indeterminate.
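To make the problem concrete, the following Python sketch simulates the two possible orderings deterministically instead of using real concurrency. The function `final_amount` and the data element `amount` are hypothetical and serve only as an illustration.

```python
import copy

def final_amount(copy_back_before_parent_update):
    """Return the parent's value of `amount` for one ordering of events (hypothetical)."""
    process_data = {"amount": 100}

    # Copy in: the asynchronous subprocess receives its own copy and changes it.
    sub_copy = copy.deepcopy(process_data)
    sub_copy["amount"] += 10

    if copy_back_before_parent_update:
        process_data.update(sub_copy)   # copy back happens first
        process_data["amount"] *= 2     # the calling process continues afterwards
    else:
        process_data["amount"] *= 2     # the calling process continues first
        process_data.update(sub_copy)   # late copy back overwrites that change
    return process_data["amount"]

early = final_amount(True)    # (100 + 10) * 2 = 220
late = final_amount(False)    # the doubling is overwritten, leaving 110
```

The same process definition yields 220 or 110 depending solely on when the copy back happens, which is the indeterminacy described above.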

Solutions

There are two potential solutions to this problem:

- Do not allow asynchronous subprocess calls.

- In the event of asynchronous subprocess calls occurring, do not copy back data elements at task completion. This is the approach utilized by XPDL.
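The second solution can be sketched as follows. This is an illustration in Python only, not XPDL or the API of any engine; `call_subprocess` and `add_note` are hypothetical names, and a thread pool stands in for asynchronous invocation.

```python
import copy
from concurrent.futures import ThreadPoolExecutor

def call_subprocess(parent_data, names, body, executor=None):
    """Invoke `body` on a copy of the named elements (hypothetical helper)."""
    # Copy in happens for both kinds of call.
    local = {name: copy.deepcopy(parent_data[name]) for name in names}
    if executor is None:
        # Synchronous call: the caller waits, so the copy-back point is well defined.
        body(local)
        for name in names:
            parent_data[name] = local[name]
        return None
    # Asynchronous call: run on the copy and never copy back.
    return executor.submit(body, local)

def add_note(data):
    # Hypothetical subprocess body.
    data["notes"].append("checked")

parent = {"notes": []}

call_subprocess(parent, ["notes"], add_note)
sync_notes = list(parent["notes"])       # the synchronous result was copied back

with ThreadPoolExecutor(max_workers=1) as pool:
    future = call_subprocess(parent, ["notes"], add_note, executor=pool)
    future.result()                      # wait only so the example is deterministic
async_notes = list(parent["notes"])      # unchanged by the asynchronous call
```

Skipping the copy back keeps the calling process deterministic, at the cost that an asynchronous subprocess has to return its results by some other means.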

Evaluation Criteria

An offering achieves full support if it has a construct that satisfies the description for the pattern. It rates as partial support if there is any limitation on the data source that can be accessed in a copy in/copy out scenario or if this approach to data transfer can only be used in specific situations.

Product Evaluation

To achieve a + rating (direct support) or a +/- rating (partial support) the product should satisfy the corresponding evaluation criterion of the pattern. Otherwise a - rating (no support) is assigned.

| Product/Language | Version | Score | Motivation |
|---|---|---|---|
| Staffware | 9 | - | Not supported |
| WebSphere MQ Workflow | 3.4 | - | Not supported |
| FLOWer | 3.0 | +/- | Supported via InOut mapping construct |
| COSA | 4.2 | - | Not supported |
| XPDL | 1.0 | +/- | As for Pattern 27 |
| BPEL4WS | 1.1 | - | Not supported |
| BPMN | 1.0 | +/- | Partially supported. It occurs when decomposition is realised through Independent Sub-Process. The data attributes to be copied into/out of the Independent Sub-Process are specified through the Input- and OutputPropertyMaps attributes |
| UML | 2.0 | - | Not supported |
| Oracle BPEL | 10.1.2 | + | Directly supported by means of two <assign> constructs |
| jBPM | 3.1.4 | + | Data is transferred in jBPM by using a Copy in/Copy out strategy. When dealing with concurrent tasks, this implies that at creation every work item receives a copy of the data values in the global process variables. Any updates to these values by a concurrent work item remain invisible outside that work item. At completion the values for each work item are copied back (if so specified) to the global process variables, overwriting the existing values for these variables. This means that the work item to complete last will be the last one to copy its values back to the process variables. To avoid problems with lost updates, a process designer needs to be careful with granting write access to variables for tasks that run in parallel and update global data. |
| OpenWFE | 1.7.3 | + | OpenWFE utilizes the Copy in/Copy out strategy when dealing with parallel tasks or multiple instance tasks. At creation, every work item receives a copy of the data values in the global variables. Any updates made to these values by a given work item instance remain invisible outside that work item. At completion the values associated with the work item (or one of them where the task is a multiple instance task) are copied back according to the strategy defined in the synchronization or multiple instances task construct, which may lead to lost update problems. To avoid this issue, filters can be used for individual parallel tasks in order to prevent simultaneous access to the same data element. However, filters cannot be used to solve this issue for multiple instance tasks. |
| Enhydra Shark | 2 | + | Enhydra Shark uses the "copy-in" technique when invoking sub-processes. The values of variables are copied to sub-process variables through 1) specified parameters; or 2) local copies of the global variables. When the invocation is synchronous, after completion, the sub-process copies its variable values back to the calling process, which is done through parameters (the local variable copies are not exported back to the global process variables). If the invocation is asynchronous, the variable values are not copied back to the calling process (even if the data transfer is specified through parameters). In this way concurrent data update problems are avoided. Enhydra Shark also uses the "copy-in" technique when transferring data between activities and between activities and block activities. When completed, an activity copies back its value to the global variable. When two activities that have copies of the same variables run concurrently and update the variable values, the updates are not visible to the concurrently running activity (as the variable copies are received at enablement). |
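The lost update problem noted for jBPM and OpenWFE, and the effect of restricting write access for parallel tasks, can be sketched in Python. This is a simplified model and not the API of either product; the `WorkItem` class and its `writable` parameter are hypothetical.

```python
import copy

class WorkItem:
    """Hypothetical work item that copies process variables in at creation."""

    def __init__(self, process_vars, writable):
        self.local = copy.deepcopy(process_vars)   # copy in
        self.writable = set(writable)              # variables it may copy back

    def complete(self, process_vars):
        # Copy out: overwrite the process variables this work item may write.
        for name in self.writable:
            process_vars[name] = self.local[name]

# Two parallel work items may both write `total`: the last to complete wins.
process_vars = {"total": 0, "status": "new"}
a = WorkItem(process_vars, writable={"total"})
b = WorkItem(process_vars, writable={"total"})
a.local["total"] += 5
b.local["total"] += 7
a.complete(process_vars)
b.complete(process_vars)
lost_update_total = process_vars["total"]   # 7: the +5 from `a` is lost

# Granting each parallel work item write access to a different variable avoids this.
process_vars = {"total": 0, "status": "new"}
a = WorkItem(process_vars, writable={"total"})
b = WorkItem(process_vars, writable={"status"})
a.local["total"] += 5
b.local["status"] = "done"
b.local["total"] += 7                       # not writable, so discarded at completion
a.complete(process_vars)
b.complete(process_vars)
safe_vars = dict(process_vars)              # {"total": 5, "status": "done"}
```

In the first run both work items start from the same snapshot, so the later copy back silently replaces the earlier one. In the second run the write sets are disjoint, so neither completion overwrites the other.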

Summary of Evaluation

| + Rating | +/- Rating |
|---|---|
| | |